Modern hybrid-IT environments are monitored by numerous multi-vendor, multi-domain monitoring tools that generate large volumes of alerts and events, much of which is not actionable. Meet CloudFabrix Alert Watch, a digital gatekeeper for all your IT alerts and events. Send all your alerts and events to CloudFabrix Alert Watch and get actionable alerts and incidents that are fully enriched, correlated and deduplicated to eliminate noise and lead to action.

Advantages of CloudFabrix Alert Watch

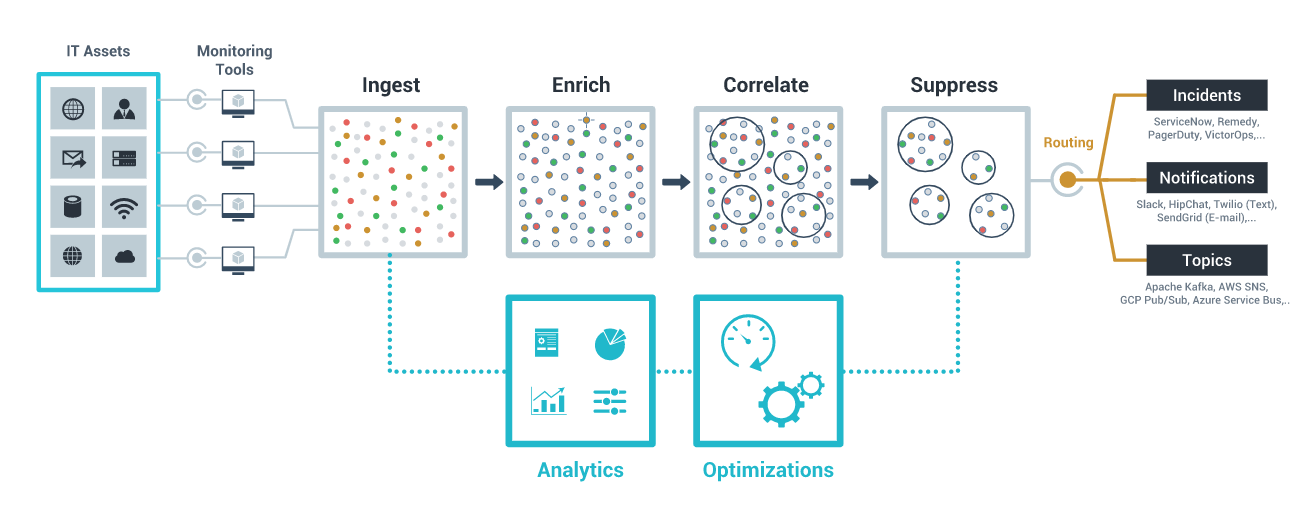

How does CloudFabrix Alert Watch work?

In an organization, a host of monitoring tools monitor devices, servers, infrastructure, applications and so on. These tools capture events or alerts as they occur. Events and alerts are ingested through a Webhook (an HTTP/REST endpoint). For event sources that cannot publish alerts or events to a Webhook, an event-consumer (a message consumer or data poller) ingests the data. Events are mainly of three types: alerts, incidents and collaboration messages.

The following broad steps are needed to ingest and process alerts from event sources:

- Create an event-source. This step creates the requisite sink (a webhook, for example) for consuming alerts from the source. The sink can then be added to the source system so that it posts alerts there.

- Create an event-bundle for interpreting and processing the event

- Add a mapper in the bundle to transform the raw event payload to an internal model that the system understands

- Add a pipeline to process the alert as it passes through the processing steps in the pipeline

Mappers convert raw events from a source into a model that the system understands. They interpret each ingested event using ‘event-bundles’: for every source the system supports, there is a corresponding event bundle. An event bundle allows you to create the following types of resources:

- Mappers

- Processors

- Pipelines

Event Mapper

- An event mapper is used to manage the transformation of a raw alert payload, ingested from an alert-source, into an event model that the system understands.
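As a minimal sketch of what a mapper does, the Python snippet below transforms a raw payload into a small internal event model. The `Event` class, the raw field names (`host`, `sev`, `msg`, `ts`) and the severity table are all hypothetical, chosen for illustration only; they are not the actual CloudFabrix model or API.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


# Hypothetical internal event model (not the product's real schema).
@dataclass
class Event:
    source: str
    resource: str
    severity: str
    message: str
    timestamp: str
    attributes: Dict[str, Any] = field(default_factory=dict)


# Assumed mapping from a vendor's numeric severity to a normalized label.
SEVERITY_MAP = {1: "critical", 2: "major", 3: "minor", 4: "info"}


def map_raw_alert(source: str, raw: Dict[str, Any]) -> Event:
    """Transform a raw alert payload into the internal event model."""
    return Event(
        source=source,
        resource=raw.get("host", "unknown"),
        severity=SEVERITY_MAP.get(raw.get("sev"), "unknown"),
        message=raw.get("msg", ""),
        timestamp=raw.get("ts", ""),
    )
```

Missing fields fall back to neutral defaults, so a partial payload still yields a valid event that later steps can enrich.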

Processor

- A processor is an execution step in the alert processing pipeline. A processor accepts the event model and adds useful information to enhance the event data.

Pipeline

- Once the event model is created, the event is routed through a pipeline. A pipeline is a sequence of processing steps, each of which invokes a ‘processor’. Each processor accepts the incoming alert model and enhances the data. So a pipeline is essentially a sequence of enrichment steps.

- Each step takes the event model and enhances it with supplementary information. Enrichment adds business context and attributes to the ingested event. In general, enrichment is of two types:

- JITE – does correlation or clustering based on a specified attribute like Stack Information, cluster, etc.

- ACE Variable – symptom clustering on variables such as site, domain, data centre, etc. Creates a correlation group policy based on properties, attributes or variables.
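The idea of a pipeline as a sequence of enrichment steps can be sketched in a few lines of Python. This is an illustration under assumptions, not the product's implementation: events are plain dictionaries, and the processors `add_site` and `add_owner`, along with their lookup tables, are hypothetical.

```python
from typing import Callable, Dict, List

Event = Dict[str, object]
Processor = Callable[[Event], Event]


def add_site(event: Event) -> Event:
    # Hypothetical lookup table standing in for an inventory source.
    sites = {"db01": "dc-east", "web01": "dc-west"}
    return {**event, "site": sites.get(str(event.get("resource")), "unknown")}


def add_owner(event: Event) -> Event:
    # Hypothetical business context keyed on the site added by the previous step.
    owners = {"dc-east": "database-team", "dc-west": "web-team"}
    return {**event, "owner": owners.get(str(event.get("site")), "unassigned")}


def run_pipeline(event: Event, processors: List[Processor]) -> Event:
    """Pass the event through each processor in order, returning the enriched event."""
    for processor in processors:
        event = processor(event)
    return event


enriched = run_pipeline({"resource": "db01", "message": "disk full"}, [add_site, add_owner])
```

Each processor returns a new dictionary rather than mutating its input, which keeps the steps independent and easy to reorder.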

How is Clustering Performed in CloudFabrix Alert Watch?

A process called symptom-based clustering is performed on the generated alerts. Unsupervised learning, a class of machine learning techniques, is used to group the alerts into one or more incidents based on their symptoms (a pattern or variable). In the first step, each alert is pre-processed and stripped of unrelated parameters such as IP addresses and timestamps, which results in better clustering.
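The pre-processing step can be illustrated with a short Python sketch that strips IPv4 addresses and ISO-style timestamps from an alert message. The regular expressions and the `preprocess` function are assumptions made for this example; a real deployment would likely handle more formats.

```python
import re

# Assumed patterns: dotted IPv4 addresses and ISO-like timestamps only.
IPV4 = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
ISO_TIMESTAMP = re.compile(r"\b\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}(?:\.\d+)?Z?")
WHITESPACE = re.compile(r"\s+")


def preprocess(message: str) -> str:
    """Remove timestamps and IP addresses, then normalize whitespace and case."""
    message = ISO_TIMESTAMP.sub(" ", message)
    message = IPV4.sub(" ", message)
    return WHITESPACE.sub(" ", message).strip().lower()
```

With these parameters removed, two alerts that describe the same symptom on different hosts or at different times reduce to the same text, which is what makes them easier to cluster.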

What do we need for Clustering?

The pre-processed and enriched alerts are then vectorized using techniques such as Word2Vec or TF-IDF. Vectorization converts data or text into multidimensional vectors that a machine can work with. The vectorized data is passed through a dimensionality reduction step, using methods such as PCA (Principal Component Analysis) or LSA (Latent Semantic Analysis), to keep the core information and drop the rest. The reduced vectors are then passed to an unsupervised machine learning technique called HDBSCAN (Hierarchical Density-Based Spatial Clustering of Applications with Noise), which clusters the alerts into their respective groups.
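To make the vectorization step concrete, here is a small pure-Python sketch of TF-IDF with a smoothed inverse document frequency, plus cosine similarity to compare two alert vectors. It covers vectorization only; dimensionality reduction and density-based clustering are omitted, and in practice a machine learning library would normally be used for all three. The function names are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def tfidf_vectors(docs: List[str]) -> List[Dict[str, float]]:
    """Return one sparse TF-IDF vector (term -> weight) per document."""
    tokenized = [doc.split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: the number of alerts in which each term appears.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        vectors.append({
            # Term frequency times a smoothed inverse document frequency.
            term: (count / len(tokens)) * (math.log((1 + n_docs) / (1 + df[term])) + 1.0)
            for term, count in tf.items()
        })
    return vectors


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors; 0.0 if either is empty."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


alerts = ["link down on port", "link down on interface", "disk usage above threshold"]
vectors = tfidf_vectors(alerts)
```

Here the two "link down" alerts share most of their terms and score a higher cosine similarity with each other than with the disk alert, which is the signal a clustering algorithm relies on.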

Clustering does not create a correlated group alert until instructed. Clustering or ML algorithms are used to create a correlation policy that combines several alerts or events into a single incident. The analytics engine creates a correlation group out of the many events generated. All the alerts in a given correlation group are categorized as a single incident. Correlation and separation are the two kinds of groups created based on enriched attributes.

Separation groups alerts without creating an incident because the alerts are anticipated, e.g., during a planned system upgrade.
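The difference between correlation and separation groups can be sketched as follows. This Python example is an assumption-laden illustration: alerts are plain dictionaries, grouping is done on a single enriched attribute, and the `planned` set of attribute values under planned maintenance is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Set

Alert = Dict[str, str]


def group_alerts(alerts: List[Alert], key: str) -> Dict[str, List[Alert]]:
    """Group alerts by the value of one enriched attribute."""
    groups: Dict[str, List[Alert]] = defaultdict(list)
    for alert in alerts:
        groups[alert.get(key, "unknown")].append(alert)
    return dict(groups)


def classify_groups(groups: Dict[str, List[Alert]], planned: Set[str]) -> Dict[str, str]:
    """Mark a group 'separation' if it is anticipated, otherwise 'correlation'."""
    return {
        value: "separation" if value in planned else "correlation"
        for value in groups
    }


alerts = [
    {"site": "dc-east", "message": "link down"},
    {"site": "dc-east", "message": "bgp session lost"},
    {"site": "dc-west", "message": "host unreachable"},
]
groups = group_alerts(alerts, "site")
kinds = classify_groups(groups, planned={"dc-west"})
```

In this sketch only the `dc-east` group would become an incident; the `dc-west` alerts are still grouped, but no incident is raised for them.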

How does CloudFabrix Alert Watch use ML/AI Algorithms?

In subsequent blogs, we will discuss more advanced features of the CloudFabrix Alert Watch module that are designed to accelerate adoption and improve operational efficiency.

To learn more about CloudFabrix Alert Watch, visit AI for Alerts.