Over the past year, Large Language Models (LLMs) such as OpenAI’s ChatGPT, Google’s Bard, Meta’s Llama 2, and the open-source Falcon have created a buzz throughout the tech sphere. But what exactly is an LLM, and how does it function? Let’s dive in for a clearer picture.

The Core of an LLM Is the Transformer

At the heart of LLMs is a neural network architecture called the Transformer. Introduced in a 2017 paper titled “Attention Is All You Need”, Transformers have become the go-to architecture for many NLP (Natural Language Processing) tasks.

The Transformer’s key innovation is the self-attention mechanism, which allows the model to weigh the significance of different words in a sentence relative to a given word. This provides a dynamic way to understand context.
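To make the idea of "weighing" words concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It rests on simplifying assumptions: the two-dimensional word vectors are made up for illustration, and the queries, keys, and values are the input vectors themselves, whereas a real Transformer layer first passes them through learned projection matrices.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Simplified self-attention: queries, keys, and values are all the
    input vectors themselves (a real layer applies learned projections)."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score every word against the current word, scaled by sqrt(dim).
        scores = [dot(query, key) / math.sqrt(dim) for key in vectors]
        weights = softmax(scores)
        # New representation: weighted average of all word vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-dimensional vectors for a three-word sentence.
sentence = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(sentence)
print([round(w, 3) for w in weights[0]])
```

In the printed row, the first word gives more weight to the second word (whose vector points in a similar direction) than to the third, which is the "focus on relevant context" behavior described above.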

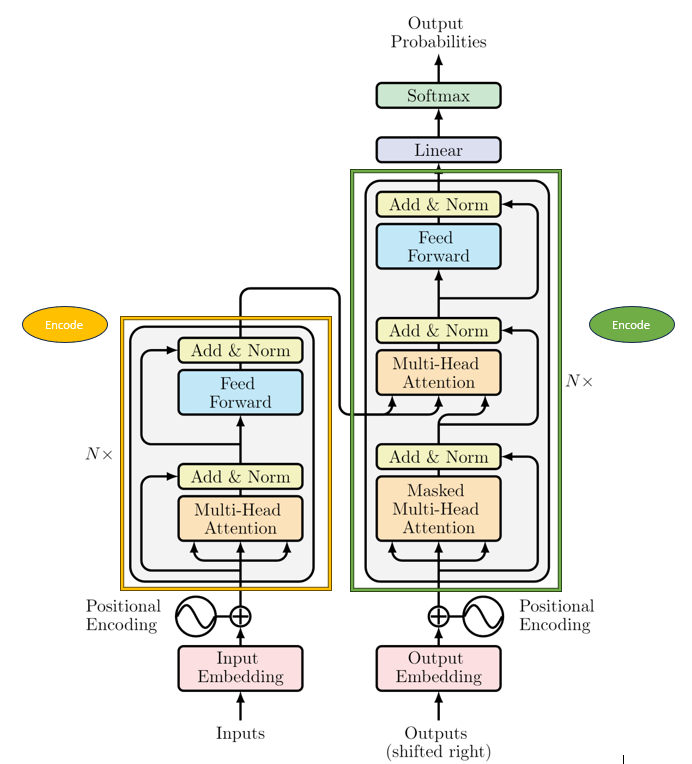

Source: “Attention Is All You Need” by Vaswani et al. (2017)

Key Components of a Transformer

Embedding Layer: Converts input words (or tokens) into vectors of numbers. Think of it as mapping each word to a point in a high-dimensional space where semantically similar words are closer together.

Self-Attention Mechanism: For each word in a sentence, it determines which other words are important in understanding the context of that word. It assigns weights to these words, allowing the model to focus more on words that provide relevant context.

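Feed-Forward Neural Networks: After self-attention has mixed in context from the other words, each word’s representation passes through a feed-forward network that is applied to every position independently. Stacking many such attention and feed-forward layers produces the final representation for each word.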

Positional Encodings: Since Transformers don’t have a built-in notion of the order of words, positional encodings are added to ensure the model knows the position of each word in a sequence.

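Encoder and Decoder: The original Transformer has two halves, an encoder that reads the input and a decoder that generates the output. GPT models use only the decoder portion for generation tasks, BERT uses only the encoder for understanding tasks such as classification, and models like T5 and BART use both encoder and decoder for tasks like translation and summarization.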
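As an illustration of the positional encodings described above, the sketch below implements the sinusoidal scheme from the “Attention Is All You Need” paper and adds it to some word vectors. The function names and the four-dimensional vectors are made up for this example, and this is only one option: many models learn their position embeddings instead.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal encoding for one position: sine on even dimensions,
    cosine on odd dimensions, each pair sharing one frequency."""
    encoding = []
    for i in range(d_model):
        # Dimensions 2k and 2k+1 both use the exponent 2k / d_model.
        angle = position / (10000 ** ((i - i % 2) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def add_positions(embeddings):
    """Add the encoding for position 0, 1, 2, ... to each word vector."""
    d_model = len(embeddings[0])
    return [
        [e + p for e, p in zip(vector, positional_encoding(pos, d_model))]
        for pos, vector in enumerate(embeddings)
    ]

# The same (hypothetical) word vector appearing at two positions.
same_word_twice = [[0.5, 0.5, 0.5, 0.5], [0.5, 0.5, 0.5, 0.5]]
with_positions = add_positions(same_word_twice)
print(with_positions[0])
print(with_positions[1])
```

The two printed vectors differ even though the word is the same, which is how the model can tell the first occurrence from the second.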

Training Process

LLMs are trained on vast amounts of text data. They learn to predict the next word in a sequence given the words before it. For instance, given “The sky is ___”, the model might learn to predict “blue” as a probable next word. Through this training, the model picks up grammar, facts about the world, some reasoning ability, and even biases present in the training data.
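The next-word objective can be illustrated with a toy that simply counts which word follows each two-word context in a tiny made-up corpus. This is a deliberate simplification: a real LLM is a neural network trained with gradient descent, not a table of counts, and the corpus, function names, and context size here are all assumptions for the sake of the example. Only the goal (predict the next word from context) is the same.

```python
from collections import Counter, defaultdict

# Tiny hypothetical training corpus.
corpus = [
    "the sky is blue",
    "the sky is blue today",
    "the sky is clear",
    "the grass is green",
]

def train(sentences, context_size=2):
    """Count how often each word follows each context of context_size words."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i in range(context_size, len(words)):
            context = tuple(words[i - context_size:i])
            counts[context][words[i]] += 1
    return counts

def next_word_probabilities(counts, context):
    """Convert the counts for one context into probabilities."""
    seen = counts[tuple(context)]
    total = sum(seen.values())
    return {word: n / total for word, n in seen.items()}

model = train(corpus)
probs = next_word_probabilities(model, ["sky", "is"])
print(probs)
```

After "sky is", this toy has seen "blue" twice and "clear" once, so "blue" comes out as the more probable continuation.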

Tokenization

Before text is fed into the model, it is broken down into chunks called “tokens”. A token can be as short as one character or as long as a whole word or more. The model has a fixed maximum number of tokens it can handle in one go, known as its context window: 2,048 tokens for GPT-3, for example.
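Here is a small sketch of both ideas: splitting text into sub-word tokens and trimming the result to a context window. The vocabulary is invented, and the greedy longest-match rule is an assumption chosen for simplicity; production tokenizers typically use learned schemes such as byte-pair encoding. Keeping the most recent tokens when trimming is likewise just one possible policy.

```python
def tokenize(text, vocab):
    """Greedy longest-match split. Characters not covered by the
    vocabulary fall back to single-character tokens."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest piece first, shrinking until one matches.
        for end in range(len(text), i, -1):
            piece = text[i:end]
            if piece in vocab or end == i + 1:
                tokens.append(piece)
                i = end
                break
    return tokens

def truncate_to_context(tokens, max_tokens):
    """Keep only the most recent max_tokens tokens."""
    return tokens[max(0, len(tokens) - max_tokens):]

# Hypothetical vocabulary of known pieces.
toy_vocab = {"token", "ization", " is", " fun", "!"}
tokens = tokenize("tokenization is fun!", toy_vocab)
print(tokens)                          # ['token', 'ization', ' is', ' fun', '!']
print(truncate_to_context(tokens, 3))  # [' is', ' fun', '!']
```

Note how one word ("tokenization") becomes two tokens while the single character "!" is a token of its own.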

Generating Outputs

When generating text, the model starts with an initial prompt and then predicts the next token, and the next, and so on, until it completes the generation or reaches a specified length.
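The loop described above can be sketched in a few lines. The lookup table below is a hypothetical stand-in for a trained model, and it only looks at the last token, whereas a real LLM conditions on the entire context so far. The `<end>` marker and the `max_new_tokens` parameter are also names assumed for this example.

```python
# Hypothetical "model": maps the latest token to the next one.
NEXT_TOKEN = {
    "the": "sky",
    "sky": "is",
    "is": "blue",
    "blue": "<end>",
}

def generate(prompt_tokens, max_new_tokens=10):
    """Append one predicted token at a time until the model signals
    the end or the length limit is reached."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        next_token = NEXT_TOKEN.get(tokens[-1], "<end>")
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens

print(generate(["the"]))                    # ['the', 'sky', 'is', 'blue']
print(generate(["the"], max_new_tokens=2))  # ['the', 'sky', 'is']
```

The first call stops because the stand-in model emits its end marker; the second stops because it hits the specified length.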

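The model’s outputs are based on probabilities. Given the context, it assigns a probability to every token in its vocabulary, based on what it learned during training. The next token is then picked from that distribution, either by taking the most likely token or by sampling.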
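Two common ways of turning probabilities into a choice are picking the single most likely token (greedy decoding) and drawing a token at random in proportion to its probability (sampling). The sketch below shows both; the probability values are invented for illustration, and the function names are not from any particular library.

```python
import random

def greedy_choice(probabilities):
    """Always pick the most probable token."""
    return max(probabilities, key=probabilities.get)

def sample_choice(probabilities, rng):
    """Pick a token at random, weighted by its probability."""
    tokens = list(probabilities)
    weights = [probabilities[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical probabilities for the word after "The sky is".
next_token_probs = {"blue": 0.6, "clear": 0.3, "falling": 0.1}

rng = random.Random(0)  # fixed seed so runs are repeatable
samples = [sample_choice(next_token_probs, rng) for _ in range(1000)]

print(greedy_choice(next_token_probs))
print({token: samples.count(token) for token in next_token_probs})
```

Greedy decoding gives the same answer every time, while sampling occasionally produces the less likely words, which is one reason the same prompt can yield different responses.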

Let’s understand with an example

“Elephants are known for their ___.”

Imagine the blank is where the model needs to predict the next word.

Breaking Down the Sentence (Tokenization):

The model breaks down the sentence into chunks or “tokens”. Think of this like taking apart a LEGO structure into individual bricks.

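“Elephants”, “are”, “known”, “for”, and “their” become separate pieces. The blank itself is not a token; it marks the position the model has to fill. (Real tokenizers may also split a longer word into several sub-word tokens.)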

Embedding – Turning Words into Numbers:

The model’s vocabulary assigns every token it knows a unique ID number, like every LEGO brick having its own part number. The sentence is first converted into a sequence of these IDs, and the embedding layer then maps each ID to a vector of numbers that the rest of the model works with.
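As a rough sketch of this step, the code below looks up an ID for each token and then a vector for each ID. The vocabulary, the three-dimensional vectors, and the extra word "tigers" are all invented for illustration; real models learn vectors with hundreds or thousands of dimensions during training.

```python
import math

# Hypothetical vocabulary: token -> ID.
vocab = {"elephants": 0, "are": 1, "known": 2, "for": 3, "their": 4, "tigers": 5}

# Hypothetical embedding table: row i is the vector for token ID i.
embedding_table = [
    [0.9, 0.8, 0.1],  # elephants
    [0.0, 0.1, 0.9],  # are
    [0.2, 0.1, 0.7],  # known
    [0.1, 0.0, 0.8],  # for
    [0.1, 0.2, 0.6],  # their
    [0.8, 0.9, 0.2],  # tigers
]

def encode(tokens):
    """Map tokens to their IDs."""
    return [vocab[token] for token in tokens]

def embed(ids):
    """Map IDs to their embedding vectors."""
    return [embedding_table[i] for i in ids]

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

ids = encode(["elephants", "are", "known", "for", "their"])
vectors = embed(ids)
print(ids)  # [0, 1, 2, 3, 4]

elephants, tigers, are = (embedding_table[vocab[w]]
                          for w in ("elephants", "tigers", "are"))
print(cosine_similarity(elephants, tigers) > cosine_similarity(elephants, are))
```

With these made-up numbers, "elephants" sits closer to "tigers" than to "are", mirroring the idea that related words end up near each other in the embedding space.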

Self-Attention – Understanding Context:

The model looks at each word and determines how important other words in the sentence are to understand its context. For instance, to fill in the blank, “Elephants” is crucial because we’re describing something about elephants. Words like “are” or “for” are less crucial in this context. Think of it as giving each LEGO brick a weight based on its importance in the overall structure.

Making a Prediction:

Using the context (the sentence so far) and what it has learned during its training, the model will guess the next word. In this case, it might predict “memory” or “trunks” or “size” to fill in the blank, because those are things commonly associated with elephants. Imagine now trying to fit the best LEGO piece in the spot where one is missing, based on the shape and design of the surrounding pieces.
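Under the hood, the "guess" is a score for each candidate word that gets converted into probabilities, usually with a softmax function. The sketch below shows that conversion; the scores are made-up numbers chosen for this example, not outputs of any real model.

```python
import math

def softmax(scores):
    """Convert a dict of raw scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the max for numerical stability
    exps = {word: math.exp(s - peak) for word, s in scores.items()}
    total = sum(exps.values())
    return {word: e / total for word, e in exps.items()}

# Hypothetical raw scores for "Elephants are known for their ___".
scores = {"memory": 2.0, "trunks": 1.5, "size": 1.0, "bicycles": -3.0}

probs = softmax(scores)
ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
for word, p in ranked:
    print(f"{word}: {p:.3f}")
```

Words commonly associated with elephants end up sharing almost all of the probability, while an unrelated word like "bicycles" gets a tiny but non-zero share.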

Generating the Output:

The model then gives us the completed sentence, such as: “Elephants are known for their memory.” The best feature of LLMs like GPT is that they’ve seen so much text (like having played with millions of LEGO sets) that they can make educated guesses about what piece should come next, even in complex or unfamiliar structures. However, just like sometimes you might disagree with a friend about which LEGO piece fits best, the model’s predictions aren’t always perfect or what everyone would expect.

Conclusion

Just as humans learn from experiences, LLMs draw from vast textual data to understand and generate language. While their predictions can be astonishingly accurate, they, like us, aren’t infallible. As tools, their potential is immense, but the true magic lies in the harmony of human creativity paired with LLM capabilities.